Data Operations Demystified: mastering 7 core principles

Last week I attended the Enterprise Data World summit.

This is one of my favourite summits, as it always offers a broad choice of sessions at every level for data professionals. Unfortunately, due to work commitments, I could only attend the second day of the summit.

The first session's original title was “Accelerating Data Management with DataOps”, but the speaker didn’t show up. The summit organizers asked another speaker, Doug Needham from DataOps.live, to fill in, and he put together a replacement session just a few minutes before it was due to start.

That takes deep knowledge of the topic, and the courage to stand up in front of a disappointed audience that was expecting someone else.

Doug is a senior data solutions architect who was doing DataOps long before the term was invented. This amazing hour was full of exciting aspects of data operations, examples from Doug’s own rich experience and an overview of the DataOps product his company is working on.

I very much enjoyed the session and, as I always try to do, summarised some of my takeaways.

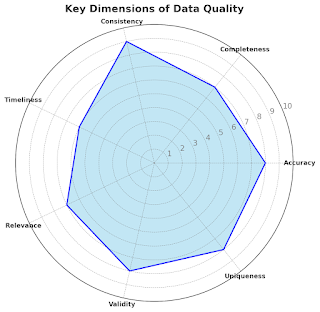

DataOps is a set of practices that aims to improve collaboration between data engineers, data analysts and everyone else who manages and uses data. It is based on 7 core principles:

- ELT (Extract, Load, Transform)

When raw data is loaded into the data warehouse without any major transformations, you can easily script the changes and transformations in later pipeline stages and apply them in a data-as-code format.
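To make the load-first, transform-later idea concrete, here is a minimal Python sketch that uses the built-in `sqlite3` module as a stand-in for a real data warehouse. The table names, columns and sample rows are hypothetical; the point is that the raw table is loaded untouched and the transformation is a SQL script kept in code, so it can be versioned and re-run.

```python
import sqlite3

# Hypothetical source rows, landed exactly as they arrive (note the messy values).
RAW_ORDERS = [
    ("1001", " alice@example.com ", "19.99"),
    ("1002", "BOB@EXAMPLE.COM", "5.00"),
    ("1003", "carol@example.com", "n/a"),
]

# The "T" step is plain SQL kept in code, so it can be versioned, reviewed and re-run.
TRANSFORM_ORDERS_SQL = """
CREATE TABLE orders AS
SELECT CAST(order_id AS INTEGER)   AS order_id,
       LOWER(TRIM(customer_email)) AS customer_email,
       CAST(amount AS REAL)        AS amount
FROM raw_orders
WHERE amount GLOB '[0-9]*';  -- simplified filter: keep amounts that start with a digit
"""


def extract_and_load(conn: sqlite3.Connection) -> None:
    """E + L: store the source data untouched, every column as text."""
    conn.execute(
        "CREATE TABLE raw_orders (order_id TEXT, customer_email TEXT, amount TEXT)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ORDERS)


def transform(conn: sqlite3.Connection) -> None:
    """T: apply the scripted transformation inside the database."""
    conn.executescript(TRANSFORM_ORDERS_SQL)


conn = sqlite3.connect(":memory:")
extract_and_load(conn)
transform(conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Because `raw_orders` still holds every source row, including the unparseable `n/a` amount, an improved version of the transformation script can be run against the same raw data without going back to the source system.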

- Agility, CI/CD and Data-as-Code principles

Agility and CI/CD make DataOps processes more efficient, reliable and adaptable: they speed up development and deployment and improve the consistency and quality of data products.

Data-as-code is a concept that extends software development principles to data management and data pipeline development. It emphasizes treating data infrastructure, pipelines and operations the way software code is developed, versioned, tested and deployed.
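As a small illustration of the "tested" part, the sketch below treats a single transformation as an ordinary Python function with unit tests, the kind of check a CI/CD pipeline could run on every commit. `normalise_email` and its tests are hypothetical examples, not part of any DataOps product.

```python
import unittest


def normalise_email(raw: str) -> str:
    """Hypothetical transformation: trim surrounding whitespace and lower-case an email."""
    return raw.strip().lower()


class NormaliseEmailTest(unittest.TestCase):
    def test_trims_and_lowercases(self) -> None:
        self.assertEqual(normalise_email("  Alice@Example.COM "), "alice@example.com")

    def test_is_idempotent(self) -> None:
        # Re-running the transformation on its own output must not change it.
        once = normalise_email(" Bob@Example.com")
        self.assertEqual(normalise_email(once), once)


if __name__ == "__main__":
    # exit=False keeps the interpreter alive so a CI script can inspect the result.
    unittest.main(argv=["normalise_email_tests"], exit=False)
```

Because the transformation and its tests live in the same repository, a change to the logic and the evidence that it still works are reviewed and versioned together.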

- Component design

Design pipelines with modularity in mind, using DAGs (Directed Acyclic Graphs) to break complex pipelines down into manageable tasks.
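A minimal sketch of a pipeline expressed as a DAG, using Python's standard-library `graphlib` module (Python 3.9+). The task names are hypothetical; each task lists the tasks it depends on, and the sorter groups them into batches whose members do not depend on each other and could therefore run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE: dict[str, set[str]] = {
    "load_raw_orders": set(),
    "load_raw_customers": set(),
    "clean_orders": {"load_raw_orders"},
    "clean_customers": {"load_raw_customers"},
    "orders_by_customer": {"clean_orders", "clean_customers"},
}


def parallel_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches; tasks in the same batch do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every dependency of these tasks is done
        batches.append(ready)
        sorter.done(*ready)  # a real runner would mark tasks done only after they succeed
    return batches


for step, batch in enumerate(parallel_batches(PIPELINE), start=1):
    print(f"step {step}: {', '.join(batch)}")
```

If someone accidentally introduces a circular dependency, `prepare()` raises `graphlib.CycleError`, so the "acyclic" part of the design is enforced rather than assumed.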

- Environment management

Examples include reusing column definitions across multiple tables and using zero-copy data cloning features to set up test and development environments.
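The sketch below shows one way to reuse column definitions: each column is declared once and the `CREATE TABLE` statements for every table are generated from it. All table, schema and database names are hypothetical. The clone helper assumes a Snowflake-style `CREATE DATABASE ... CLONE ...` statement for zero-copy cloning; other platforms use different syntax, so treat that part as an assumption.

```python
# Hypothetical shared column definitions: each column is declared exactly once.
COLUMNS: dict[str, str] = {
    "customer_id": "INTEGER NOT NULL",
    "order_id": "INTEGER NOT NULL",
    "email": "VARCHAR(320)",
    "created_at": "TIMESTAMP NOT NULL",
}

# Hypothetical tables built from the shared definitions above.
TABLES: dict[str, list[str]] = {
    "customers": ["customer_id", "email", "created_at"],
    "orders": ["order_id", "customer_id", "created_at"],
}


def create_table_sql(schema: str, table: str) -> str:
    """Generate a CREATE TABLE statement from the shared column definitions."""
    columns = ",\n    ".join(f"{name} {COLUMNS[name]}" for name in TABLES[table])
    return f"CREATE TABLE {schema}.{table} (\n    {columns}\n);"


def clone_environment_sql(source_db: str, target_db: str) -> str:
    """Assumes Snowflake-style zero-copy cloning syntax; other platforms differ."""
    return f"CREATE OR REPLACE DATABASE {target_db} CLONE {source_db};"


# The same definitions produce identical DDL in every environment.
for schema in ("dev", "test"):
    print(create_table_sql(schema, "orders"))

# A development database created as a zero-copy clone of production.
print(clone_environment_sql("analytics_prod", "analytics_dev"))
```

Changing a type in `COLUMNS` then changes it consistently in every table and every environment that is generated from it.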

- Governance

Automate change control, apply security principles, and maintain a metadata repository / data catalog that tracks information about data sources, transformations and lineage.
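A toy, in-memory version of a metadata repository that records each dataset's source, transformation and upstream dependencies, and can answer the lineage question "what does this table ultimately depend on?". The class, field names and dataset names are hypothetical; a real data catalog would persist this information and populate it automatically from the pipeline.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    source: str  # originating system, file or upstream table
    transformation: str  # description or path of the code that builds the dataset
    upstream: list[str] = field(default_factory=list)


class DataCatalog:
    """Toy in-memory metadata repository (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.name] = entry

    def lineage(self, name: str) -> list[str]:
        """All datasets `name` depends on, directly or indirectly, nearest first."""
        seen: list[str] = []
        queue = deque(self._entries[name].upstream)
        while queue:
            current = queue.popleft()
            if current in seen:
                continue
            seen.append(current)
            if current in self._entries:  # unregistered sources simply end the chain
                queue.extend(self._entries[current].upstream)
        return seen


catalog = DataCatalog()
catalog.register(CatalogEntry("raw_orders", "shop_db.orders", "raw load, no transformation"))
catalog.register(CatalogEntry("raw_customers", "crm_export.csv", "raw load, no transformation"))
catalog.register(
    CatalogEntry("clean_orders", "raw_orders", "transforms/clean_orders.sql", ["raw_orders"])
)
catalog.register(
    CatalogEntry(
        "orders_by_customer",
        "clean_orders, raw_customers",
        "transforms/orders_by_customer.sql",
        ["clean_orders", "raw_customers"],
    )
)
print(catalog.lineage("orders_by_customer"))
```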

- Automation

The more you can automate, the better: data environment configuration, data testing (as many data validations as possible, both on individual columns and on relationships between columns) and the deployment of data flows.
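A minimal sketch of automated data tests in plain Python, with single-column checks (not null, unique) and checks on relationships between columns (`total` equals `quantity * unit_price`, `shipped_on` is not before `ordered_on`). The rows and column names are hypothetical; in practice the same rules would typically run as SQL against the warehouse or through a data testing framework.

```python
import math
from collections import Counter
from datetime import date

# Hypothetical order rows; in practice they would be queried from the warehouse.
ORDERS = [
    {"order_id": 1, "quantity": 2, "unit_price": 5.0, "total": 10.0,
     "ordered_on": date(2024, 5, 1), "shipped_on": date(2024, 5, 2)},
    {"order_id": 2, "quantity": 1, "unit_price": 7.5, "total": 7.5,
     "ordered_on": date(2024, 5, 3), "shipped_on": date(2024, 5, 1)},
    {"order_id": 2, "quantity": 3, "unit_price": 2.0, "total": None,
     "ordered_on": date(2024, 5, 4), "shipped_on": None},
]


def run_checks(rows: list[dict]) -> dict[str, list[int]]:
    """Return {check name: indexes of failing rows}; an empty dict means all checks passed."""
    failures: dict[str, list[int]] = {}
    id_counts = Counter(row["order_id"] for row in rows)

    def check(name: str, predicate) -> None:
        bad = [i for i, row in enumerate(rows) if not predicate(row)]
        if bad:
            failures[name] = bad

    # Column-level checks.
    check("total is not null", lambda r: r["total"] is not None)
    check("order_id is unique", lambda r: id_counts[r["order_id"]] == 1)
    # Checks on relationships between columns (rows with missing values are skipped here).
    check("total = quantity * unit_price",
          lambda r: r["total"] is None
          or math.isclose(r["total"], r["quantity"] * r["unit_price"]))
    check("shipped_on >= ordered_on",
          lambda r: r["shipped_on"] is None or r["shipped_on"] >= r["ordered_on"])
    return failures


print(run_checks(ORDERS))
```

A CI/CD job could call `run_checks` after each deployment and fail the run whenever the returned dictionary is not empty.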

- Collaboration & Self-Service

Rely on Git for the development of all data objects rather than on third-party tools to compare environments and work out what has changed.

DataOps is not about technology; it is a set of principles. If you incorporate the principles above into your data operations, you can proudly say that you produce agile data your customers can trust.
