The Greatest Reasons to use or not to use a Centralized Data Access Architecture

When developing a modern Data Platform Layer, one of the main decisions is whether to opt for centralized or decentralized data access architecture.

There is no “one-fits-all” solution, both have advantages and disadvantages.

A Centralized Data Access Architecture would usually mean duplicating data from the operational layer into the analytical layer and applying various transformations to data to support and speed up data analytics.

- Operational online transaction processing Layer, where all microservices and their operational databases are located.

- Analytical Data Layer, where we would have data lakes that support data Scientists' work and a data warehouse, that supports Business Intelligence.

- Transformations, ETL or ELT data pipelines, which are moving data from the operational layer into the analytical layer.

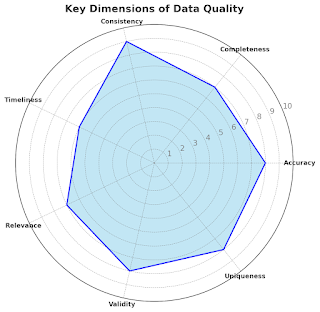

- CONSISTENCY: consolidating data into a central location can help data accuracy and reliability by having a single source of truth and aligned data modelling standards.

- PERFORMANCE: having all data in one place improves direct access query performance.

- GOVERNANCE: easy monitoring and controlling data access

- DEMOCRATIZED DATA ACCESS: users will access a singular data store and singular technology

- COST: All domains’ data would be duplicated from operational to analytical layers.

- DATA LATENCY: This is a fragile architecture that requires centralized responsibility for the Data Pipelines. Data pipeline might take some time to move the data, depending on how many transformations are applied on the way and also failures can lead to big delays.

- MISALIGNMENT between data producers and ingestion team: Operational layer data changes when not communicated to the Pipeline team can lead to pipeline outages.

- COMPLEXITY and TIGHT COUPLING: The more data grows, the Data pipelines eventually become a complex labyrinth that is difficult to maintain. Stages of pipelines are linearly dependent, new features might require changes in multiple pipeline components which might break down data quality or other pipelines or slow down deployments.

- DATA QUALITY: since there is no central ownership of operational and analytical layers, data quality suffers, and data engineers lack domain expertise to understand the nuances of how data is produced and how data is consumed upstream.

- HARD TO SCALE: scaling a huge system is hard (although cloud data warehouses make scaling a piece of cake)

Does this list sound familiar? Doesn't it sound similar to the monolith vs microservices arguments?

Splitting business responsibility into separate components that can be separately developed and managed and scaled makes perfect sense also in the Data Architecture world.

Subscribe to see upcoming blogs on decentralized data architectures.

.png)

Comments

Post a Comment