How Data Mesh architecture and Data Catalogs help decentralized data teams.

Not too long ago, Data Administrators had to change their long habit of having a monolith database.

They were forced to accept and agree to the Polyglot persistence - the developer's teams have started to choose different data storage and technologies that would support each application team's data model requirements.

The time has arrived to break down also the Data Lake monolith paradigm.

Refactoring monolith Data Lake makes a lot of sense.

The central data lake as well as the central data team is often a huge bottleneck. The central data team is usually busy with fixing broken data pipes and taking care of constant data changes made by the domain owners/development teams.

Data Mesh architecture is coming to the rescue here. Instead of a centralized data team, there would be multiple decentralised domain data teams, producing data sets or consuming other teams' data sets. Domain data team usually knows their domain data very well and are aware of any changes that are happening.

- Data Metadata and Lineage,

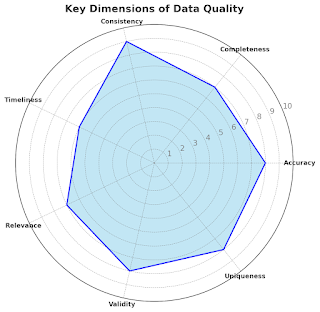

- Data Quality,

- Data Freshness

- Data Availability.

Data Catalog is an organized centralized data assets inventory in the organization that would discover Data Assets and their metadata across data domains.

Data Catalogs will answer questions, similar to "Where is this data living?", "Who is the data set owner?", "How often is this data set updated?", "Where is this data coming from?", "Which datasets is this dashboard taking data from?", "How the data is being used"

Data Catalogs would enable the domain teams and data analysts to find relevant data assets, enforce common business vocabulary, and ensure data freshness and correctness.

Which Data Catalog products are you using in your organization?

Comments

Post a Comment